Roto++: Accelerating Professional Rotoscoping using Shape Manifolds

Overview

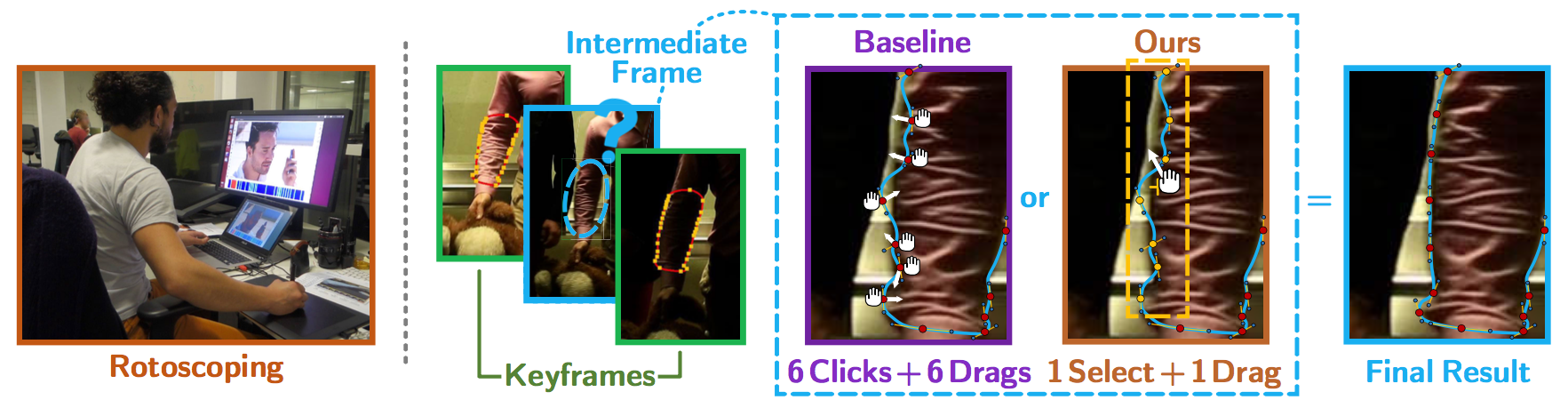

Rotoscoping (cutting out different characters/objects/layers in raw video footage) is a ubiquitous task in modern post-production and represents a significant investment in person-hours. In this work, we study the particular task of professional rotoscoping for high-end, live-action movies and propose a new framework that works with roto-artists to accelerate the workflow and improve their productivity.

Working with the existing keyframing paradigm, our first contribution is the development of a shape model that is updated as artists add successive keyframes. This model is used to improve the output of traditional interpolation and tracking techniques, reducing the number of keyframes that need to be specified by the artist. Our second contribution is to use the same shape model to provide a new interactive tool that allows an artist to reduce the time spent editing each keyframe. The more keyframes that are edited, the better the interactive tool becomes, accelerating the process and making the artist more efficient without compromising their control. Finally, we also provide a new, professionally rotoscoped dataset that enables truly representative, real-world evaluation of rotoscoping methods. We used this dataset to perform a number of experiments, including an expert study with professional roto-artists, to show, quantitatively, the advantages of our approach.
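The idea of a shape model that is refit as keyframes are added, and then used to regularise interpolated or tracked shapes, can be illustrated with a minimal sketch. This is not the Roto++ implementation: it assumes a plain linear PCA subspace as a stand-in for the shape model, and the function names `fit_shape_model` and `project_to_model` are hypothetical.

```python
import numpy as np

def fit_shape_model(keyframes, n_components=2):
    """Fit a linear shape subspace (PCA stand-in, an assumption of this sketch).

    keyframes: array of shape (K, P, 2), K keyframe shapes with P control points.
    Returns the mean shape vector and the top principal directions.
    """
    X = np.asarray(keyframes, dtype=float).reshape(len(keyframes), -1)  # (K, 2P)
    mean = X.mean(axis=0)
    # Rows of Vt are the principal shape directions, ordered by singular value.
    _, _, Vt = np.linalg.svd(X - mean, full_matrices=False)
    return mean, Vt[:n_components]

def project_to_model(shape, mean, basis):
    """Snap a (P, 2) shape, e.g. a tracker output, onto the learned subspace."""
    x = np.asarray(shape, dtype=float).reshape(-1) - mean
    coeffs = basis @ x                      # coordinates within the shape model
    return (mean + basis.T @ coeffs).reshape(-1, 2)

# Refit whenever the artist adds a keyframe, then clean up an in-between shape.
base = np.array([[0.0, 0.0], [1.0, 0.0], [1.0, 1.0], [0.0, 1.0]])
deform = np.array([[1.0, 0.0], [0.0, 0.0], [0.0, 0.0], [0.0, 0.0]])
keyframes = np.stack([base + t * deform for t in (0.0, 0.5, 1.0)])
mean, basis = fit_shape_model(keyframes, n_components=1)
tracked = base + 0.25 * deform + np.array([[0, 0], [0, 0.3], [0, 0], [0, 0]])
cleaned = project_to_model(tracked, mean, basis)
```

In this toy setup the keyframes only ever move the first control point, so the projection discards the spurious drift of the second point in `tracked` while keeping the plausible deformation. Each new keyframe adds a row to `keyframes`, so refitting gives the model more shape variation to draw on.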

Publication

Wenbin Li, Fabio Viola, Jonathan Starck, Gabriel J. Brostow and Neill D.F. Campbell,

ACM Transactions on Graphics (SIGGRAPH), vol. 35, no. 4, 2016

[pdf] [supplemental] [code]

Code and Data

The code for our Roto++ tool is available on GitHub here for non-commercial research use. The dataset is also available via The Foundry website here.

Acknowledgements

The “MODE” footage is provided courtesy of James Courtenay Media and the rotoscoping work was kindly performed for The Foundry by Pixstone Images. All datasets were prepared by Courtney Pryce with special thanks to John Devasahayam and the PixStone Images team for turning around a vast amount of work in such a short period of time.

The study was coordinated by Juan Salazar, Dan Ring and Sara Coppola, with thanks to the experts from Lipsync Post, The Moving Picture Company, and Double Negative: Kathy Toth, Andy Quinn, Benjamin Bratt, Richard Baillie, Huw Whiddon, William Dao and Shahin Toosi.

This research has received funding from the EPSRC “Centre for the Analysis of Motion, Entertainment Research and Applications” CAMERA (EP/M023281/1) and “Acquiring Complete and Editable Outdoor Models from Video and Images” OAK (EP/K023578/1, EP/K02339X/1) projects, as well as the European Commission’s Seventh Framework Programme under the CR-PLAY (no. 611089) and DREAMSPACE (no. 610005) projects.