PhD Position: MyWorld PhD Studentships

We are inviting project proposals for fully funded (stipend and fees) PhD studentships commencing in January or March 2024. The studentships are available to UK students; conditions may apply to non-UK students.

The successful student will be part of the Centre for the Analysis of Motion, Entertainment Research and Applications (CAMERA), which performs world-leading multi-disciplinary research in Intelligent Visual and Interactive Technology. Funded by the EPSRC and the University of Bath, CAMERA exists to accelerate the impact of fundamental research being undertaken at the University in the Departments of Computer Science, Health and Psychology. The successful candidate will work closely with experts from CAMERA, with collaborators from the University of Bristol and with project partners associated with the MyWorld programme.

MyWorld is an ambitious five-year programme funded by the UKRI Strength in Places Fund. The £46m programme brings together 30 partners from Bristol and Bath’s creative technologies sector and world-leading academic institutions to create a unique cross-sector consortium. We are searching for motivated students who wish to create the next generation of content capture and creation tools for the creative industries across film, TV, games and immersive Virtual and Augmented/Mixed Reality. This work will make use of the latest advances in Machine Learning technology, e.g. generative AI, trained on datasets of world-leading quality obtained via our unique CAMERA Studio facilities.

The project proposal should be within the key themes of:

- Automatic capture and rigging of high-quality avatars/digital doubles including biomechanically accurate dynamics

- Efficient capture of cinematic quality 4D volumetric data including ML/AI tools to allow artistic control and editing

- Next generation of virtual production including lightfield synthesis to recreate truly real-world lighting on set

The following section details the brand-new equipment to which you will be given priority access. This work will be interdisciplinary and collaborative and will span research across computer vision, computer graphics and machine learning. Candidates can come from a background in any of these fields but should be motivated to learn techniques and theory from across the disciplines; all areas will build on firm foundations in maths and programming.

The CAMERA Studio

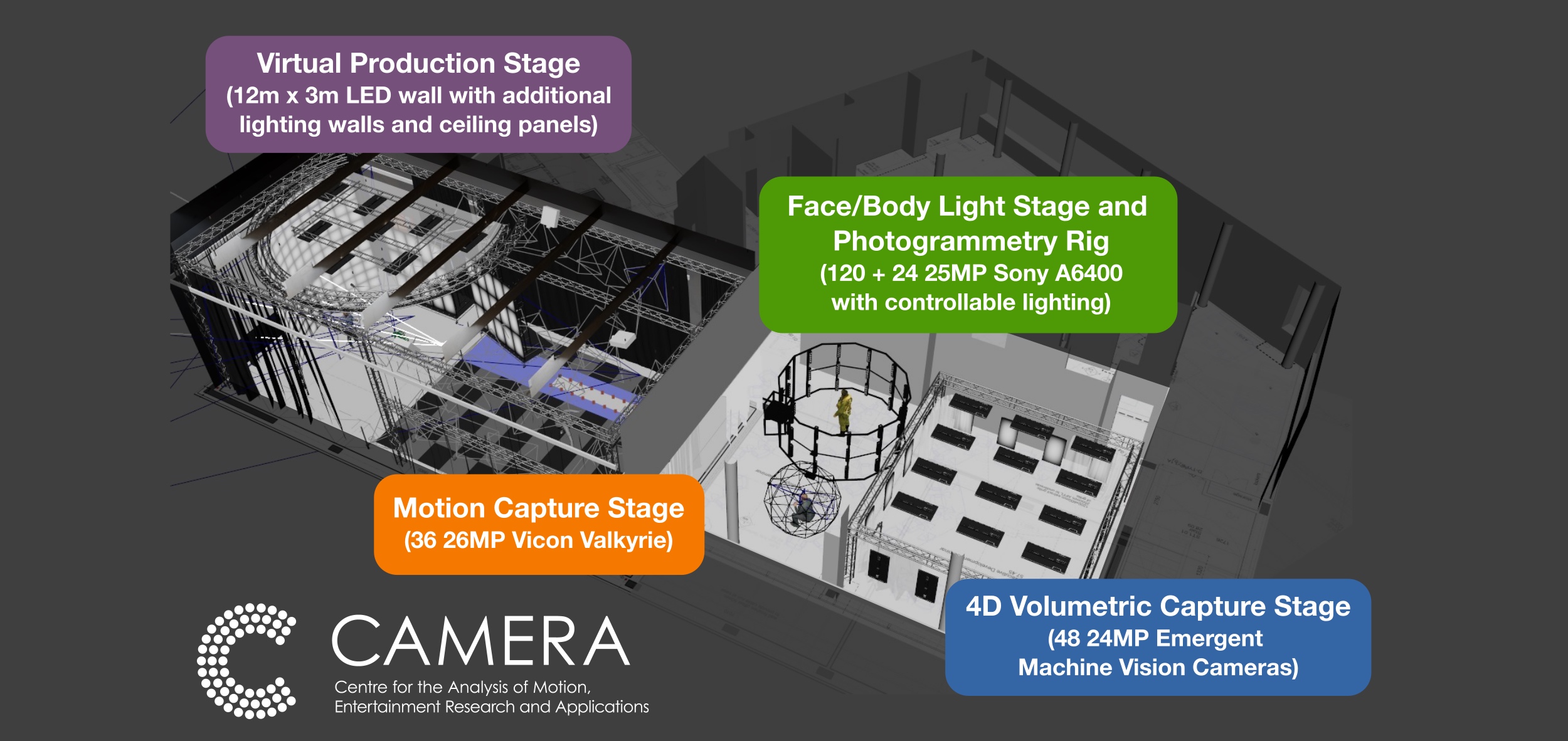

As part of our recent expansion, we are very excited about the opening of the new CAMERA Studio, which uniquely comprises four state-of-the-art capture stages, as illustrated above:

- Motion Capture Stage: Our marker-based motion-capture facility is centred on 36 Vicon Valkyrie cameras (26MP each) that can be used to capture highly detailed movement (e.g. individual fingers) as well as group interactions (many participants) in the large capture volume. We are interested in capturing truly realistic motion: this could be for training or validating markerless capture systems or for biomechanical analysis. We have force plates to capture ground-reaction forces and suspension harnesses to simulate constrained motion (e.g. movement under Lunar or Martian gravity).

- Face/Body Light Stage and Photogrammetry Rig: The face capture system comprises a light-stage from Esper with 25MP cameras and high-speed lighting control. The photogrammetry rig allows us to perform body reconstructions, e.g. for creating avatars. Combined, they allow us to capture cinematic quality datasets, e.g. to investigate training data for automatic avatar creation and rig construction suitable for high-end visual effects.

- 4D Volumetric Capture Stage: This new room-scale stage allows for free-form 4D capture or lightfield capture using 48 Emergent machine vision cameras (24MP each). This will allow capture of high-quality, dynamic datasets. We are interested in using new view synthesis to provide virtual camera paths, as well as in new methods for artistic control and editing of volumetric data (e.g. allowing NeRFs or similar to be used in immersive AR/VR productions or in visual effects).

- Virtual Production Stage: This brand-new 12m by 3m LED wall will allow us to develop the next generation of capture and editing tools for media production. After initial success with projects such as ILM’s work on The Mandalorian, there is an increasing demand for virtual production. Access to a state-of-the-art background wall plus movable front lighting walls and ceiling panels will let us work on the next generation of production and visual effects pipelines. We are particularly interested in generating the highest quality capture, e.g. recreation of lightfields for lighting accuracy, and in providing the most intuitive creative control for the director, actors and crew.

You will work alongside the six members of our excellent studio team who have long-standing experience across both technical and creative/artistic aspects of the studio. Collaboratively, they will assist with capturing exciting and challenging new datasets or showcasing our research with internationally leading studios and award-winning creative teams (e.g. DNEG and Aardman Animations).

CAMERA and MyWorld Partners

There are many collaboration opportunities with our external partners across the CAMERA centre and the wider MyWorld programme. Examples include the Foundry, a world-leading provider of tools to the Visual Effects (VFX) and Post-Production industry, and DNEG, the internationally acclaimed Post-Production house with numerous accolades including Academy Awards for Dune, Tenet, First Man, Blade Runner 2049, Ex Machina, Interstellar and Inception.

Wider Research Group

In addition to working with the CAMERA centre in Bath, the MyWorld research project will include regular technical and creative collaboration with researchers at the Universities of Bristol, Bath Spa and the West of England (UWE). You will also join the vibrant Visual Computing (VC) and Machine Learning and AI groups at Bath. The VC group comprises around 30 doctoral students, 10 post-doctoral researchers and 8 academics and presents many opportunities for collaborative work and shared publications.